Posted by Kaitlin

There are lots of myths and misconceptions surrounding the subject of international SEO. I recently gave a Mozinar on this; I’d like to share the basis of that talk in written form here. Let’s first explore why international SEO is so confusing, then dive into some of the most common myths. By the end of this article, you should have a much clearer understanding of how international SEO works and how to apply the proper strategies and tactics to your website.

One common trend is the lack of clarity around the subject. Let’s dig into that:

Why is international SEO so confusing?

There are several reasons:

- Not everyone reads Google’s Webmaster Guidelines or has a clear understanding of how Google indexes and ranks international content.

- Guidelines vary among search engines, such as Bing, Yandex, Baidu, and Google.

- Guidelines change over time, so it’s difficult to keep up with changes and adapt your strategy accordingly.

- It’s difficult to implement best practices on your own site. There are many technical and strategic considerations that can conflict with business needs and competing priorities. This makes it hard to test and find out what works best for your site(s).

A little history

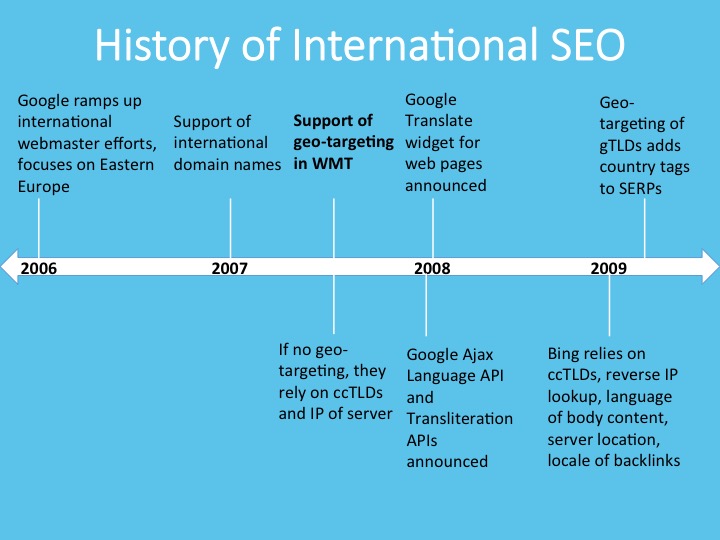

Let’s explore the reasons behind the lack of clarity on international SEO a bit further. Looking at its development over the years will help you better understand the reasons why it’s confusing, laying some groundwork for the myth-busting that is about to come. (Also, I was a history major in college, so I can’t help but think in terms of timelines.)

Please note: This timeline is based almost entirely on Google Webmaster blog announcements. There are a few notes in here about Bing and Yandex, but it’s mostly focused on Google and isn’t meant to be comprehensive; it’s mainly for illustrative purposes.

2006–2008

Our story begins in 2006. In 2006 and 2007, things are pretty quiet. Google makes a few announcements, the biggest being that webmasters could use geo-targeting settings within Webmaster Tools. They also clarify some of the signals they use for detecting the relevance of a page for a particular market: ccTLDs, and the IP of a server.

2009

In 2009, Bing reveals its secret sauce, which includes ccTLDs, reverse IP lookup, language of body content, server location, and location of backlinks.

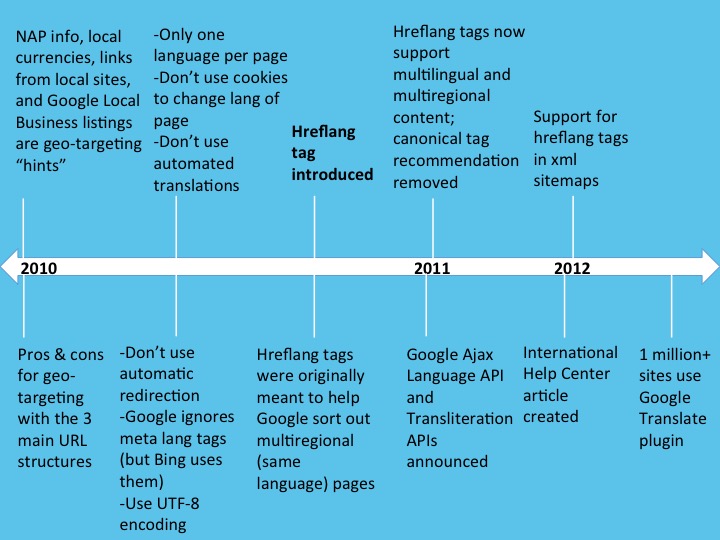

2010

In 2010, things start to get really exciting. Google reveals some of the other hints that they use to detect geo-targeting, presents the pros and cons of the main URL structures that you can use to set up your international sites, and gives loads of advice about what you should or shouldn’t do on your site. Note that at just about the same time that Google says they ignore the meta language tag, Bing says that they do use that tag.

Then, in the fall of 2010, hreflang tags are introduced to the world. Until then, there was no standard page-level tag to tell a search engine what country or language you were specifically targeting.

2011

Originally, hreflang tags were only meant to help Google sort out multi-regional pages (that is, pages in the same language that target different countries). However, in 2011, Google expands hreflang tag support to work across languages as well. Also during this time, Google removes the requirement to use canonical tags in conjunction with hreflang tags, saying they want to simplify the process.

2012

Then in 2012, hreflang tags are supported in XML sitemaps (not just page tags). Also, the Google International Help Center is created, with a bunch of useful information for webmasters.

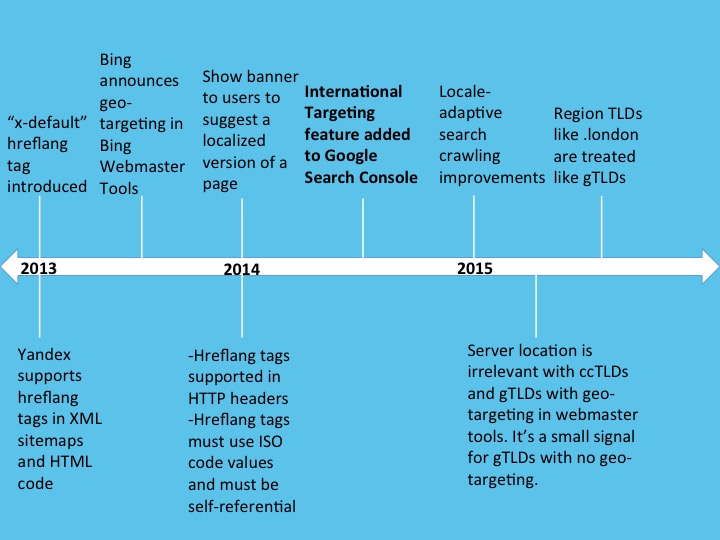

2013

In 2013, the concept of the “x-default” hreflang tag is introduced, and we learn that Yandex is also supporting hreflang tags. This same year, Bing adds geo-targeting functionality to Bing Webmaster Tools, a full 5 years after Google did.

2014

Note that it isn’t until 2014 that Google begins including hreflang tag reporting within Google Webmaster Tools. Up until that point, webmasters would have had to read about hreflang tags somewhere else to know that they exist and should be used for geo-targeting and language-targeting purposes. Hreflang tags become much more prominent after this change.

2015

In 2015, we see improvements to locale-adaptive crawling, and some clarity on the importance of server location.

To sum up, this timeline shows several trends:

- Hreflang tags were super confusing at first

- There were several iterations to improve hreflang tag recommendations between 2011 and 2013

- Hreflang tag reporting was only added to Google Search Console in 2014

- Even today, only Google and Yandex support hreflang. Bing and the other major search engines still do not.

There are good reasons why webmasters and SEO professionals have misconceptions and questions about how best to approach international SEO.

At least 25% of hreflang tags are incorrect

Let’s look at the adoption of hreflang tags specifically. According to NerdyData, 1.7 million sites have at least one hreflang tag.

I did a quick search to find out:

438,417 sites have hreflang=“uk”

7,829 sites have hreflang=“en-uk”

Both of these tags are incorrect. The correct ISO code for the United Kingdom is actually gb, not uk. Plus, you can’t target by country alone — you have to target by language-country pairs or by language. Thus, just writing “uk” is incorrect as well.

That means at least 25% of sites using hreflang have an incorrect value (the “uk” search alone accounts for 438,417 of the 1.7 million sites, or about 26%), and I only did a brief search to find a couple of the most commonly mistaken ones. You can imagine just how many sites out there are getting these hreflang values wrong.
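One rough way to catch mistakes like these before they ship is to check the shape of each hreflang value against the ISO code lists. The sketch below is illustrative only: it assumes small hard-coded samples of ISO 639-1 (language) and ISO 3166-1 alpha-2 (country) codes where a real check would load the full lists, it ignores script subtags such as zh-Hant, and the function name is hypothetical. It flags a bare “uk” because that value is syntactically valid but means Ukrainian (the ISO 639-1 code), not the United Kingdom.

```python
# Illustrative subsets only: a real check should load the complete
# ISO 639-1 (language) and ISO 3166-1 alpha-2 (country) code lists.
LANGUAGES = {"de", "en", "es", "fr", "ja", "uk", "zh"}
COUNTRIES = {"AU", "CA", "DE", "ES", "FR", "GB", "JP", "UA", "US"}


def hreflang_issues(value: str) -> list[str]:
    """Return human-readable problems with one hreflang value (empty if none).

    Handles "language", "language-COUNTRY", and "x-default"; script
    subtags such as "zh-Hant" are not handled by this sketch.
    """
    if value.lower() == "x-default":
        return []
    parts = value.split("-")
    if len(parts) > 2:
        return [f"{value!r}: expected 'language' or 'language-COUNTRY'"]
    issues = []
    language = parts[0].lower()
    if language not in LANGUAGES:
        issues.append(f"{value!r}: unknown language code {parts[0]!r}")
    elif language == "uk" and len(parts) == 1:
        # Valid syntax, but it means Ukrainian, not the United Kingdom.
        issues.append(f"{value!r}: 'uk' is Ukrainian; for English in the UK use 'en-GB'")
    if len(parts) == 2:
        country = parts[1].upper()
        if country == "UK":
            issues.append(f"{value!r}: the ISO code for the United Kingdom is 'GB', not 'UK'")
        elif country not in COUNTRIES:
            issues.append(f"{value!r}: unknown country code {parts[1]!r}")
    return issues


for value in ["uk", "en-uk", "en-GB", "x-default"]:
    print(value, hreflang_issues(value))
```

A page that genuinely is in Ukrainian will also be flagged for “uk”, so treat that message as a prompt to double-check rather than a hard error.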

All of this is to prove a point: the field is ripe for optimization when it comes to global SEO. Now, let’s debunk some myths!

Myth #1: I need to have multiple websites in order to rank around the world.

There’s a lot of talk about needing ccTLDs or separate websites for your international content. (A ccTLD is a country code top-level domain, such as the .ca in example.ca, which is the country code for Canada.)

However, it is possible for your website to rank in multiple locations around the world. You don’t necessarily need multiple websites or sub-domains to rank internationally; in many cases, you can work within the confines of your current domain.

In fact, if you look at your website’s analytics, chances are you already have traffic coming in from various languages and countries, even if the site has no geo-targeting whatsoever.

Many global brands have only one site, using subfolders for their multilingual or multi-regional content. Don’t feel that international SEO is beyond your reach because you believe it requires multiple websites. You may only need one!

The most important thing to remember when deciding whether you need separate websites is that new websites start with zero authority. You will have to fight an uphill battle to establish those new ccTLDs, build their authority, and get them ranking, and for some companies, organic traffic growth may be slow for many years after launching ccTLDs. This is not to say that ccTLDs are not a good option; just keep in mind that they are not the only option.

Myth #2: “The best site structure for international rankings is _________.”

There’s a lot of debate about what the best site structure is for international rankings. Is it subfolders? Subdomains? ccTLDs?

Some people swear by ccTLDs, saying that in some markets users prefer to buy from local sites, resulting in higher click-through rates. Others champion subdomains or subdirectories.

There is no one answer to the best international site structure. You can dominate using any of these options. I’ve seen websites of all site structures dominate in their verticals. However, there are certain advantages and disadvantages to each, so it’s best to research your options and decide which is best for you.

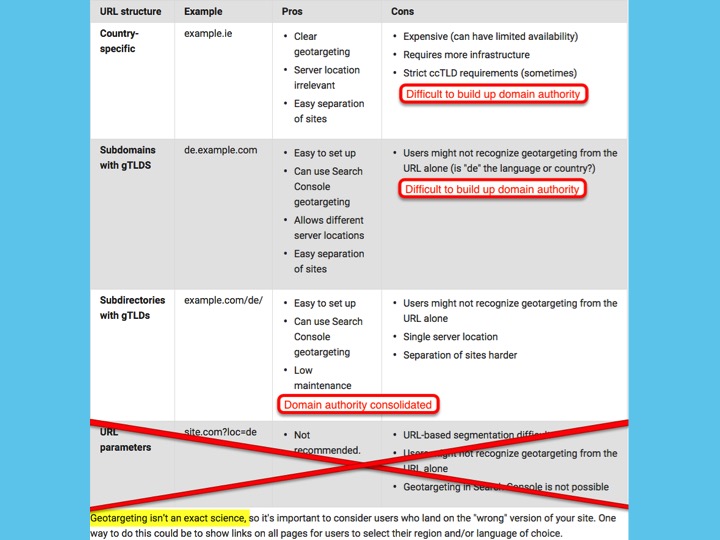

Google has published their pros and cons breakdown of the URL structures you can use for international targeting. There are 4 options listed here:

- Country-specific, aka ccTLDs

- Subdomains

- Subdirectories with gTLDs (generic top-level domains, like .com or .org)

- URL parameters. These are not recommended.

Subdirectories with gTLDs have the added benefit of consolidating domain authority, while subdomains and ccTLDs have the disadvantage of making it harder to build up domain authority. In my opinion, subdomains are the least advantageous of the 3 options because they do not have the distinct advantage of geo-targeting that ccTLDs do, and they don’t have the advantage of a consolidated backlink profile that subdirectories do.

The most important thing to think about is what’s best for your business. Consider whether you want to target at the language level or the country level. Then decide how much effort you want to (or can) put into building up domain authority for your new domains.

Or, for those who are more visual learners:

- ccTLDs are a good option if you’re Godzilla. If branding isn’t a problem for you, if you have your own PR, if building up domain authority and handling multiple domains is no big deal, then ccTLDs are a good way to go.

- Subdirectories are a good option if you’re MacGyver. You’re able to get the job done using only what you’ve got.

- Subdomains are a good option if you’re Wallace and Gromit. Somehow, everything ends well despite the many bumps in the road.

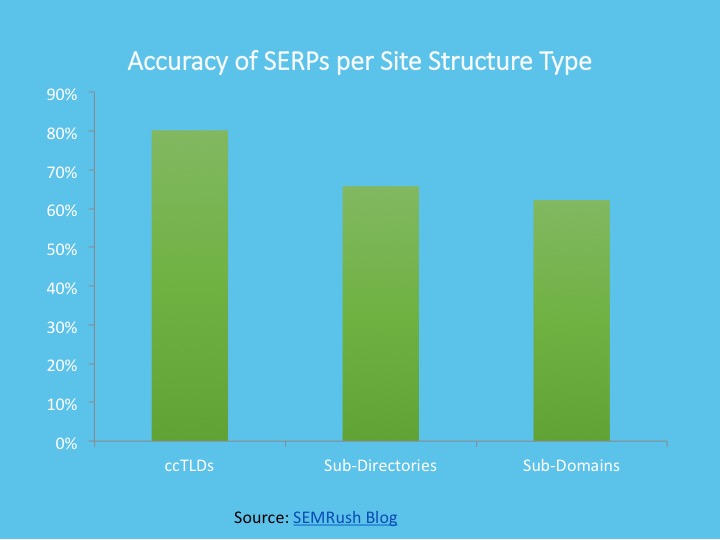

I researched the accuracy of each type of site structure. First, I looked at Google Analytics data and SEMRush data to find out what percentage of the time the correct landing page URL was ranking in the correct version of Google. I did this for 8 brands and 30 sites in total, so my sample size was small, and there are many other factors that could skew the accuracy of this data. But it’s interesting all the same. ccTLDs were the most accurate, followed by subdirectories, and then subdomains. ccTLDs can be very effective because they give very clear, unambiguous geo-targeting signals to search engines.
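The accuracy measure described above boils down to a ratio per site structure: the share of observations where the URL that ranked in a given country’s Google was the URL intended for that country. Here is a minimal sketch of that calculation; the observation rows and example.* URLs are made up for illustration and are not the data behind the chart.

```python
from collections import defaultdict

# Hypothetical observations: (site structure, country version of Google,
# URL that actually ranked, URL that should have ranked there).
observations = [
    ("ccTLD", "google.de", "https://example.de/", "https://example.de/"),
    ("ccTLD", "google.fr", "https://example.fr/", "https://example.fr/"),
    ("subdirectory", "google.de", "https://example.com/", "https://example.com/de/"),
    ("subdirectory", "google.fr", "https://example.com/fr/", "https://example.com/fr/"),
]


def accuracy_by_structure(rows):
    """Fraction of rows per structure where the ranking URL was the intended one."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for structure, _google_version, ranked_url, expected_url in rows:
        total[structure] += 1
        if ranked_url == expected_url:
            correct[structure] += 1
    return {structure: correct[structure] / total[structure] for structure in total}


print(accuracy_by_structure(observations))
```

With a sample this small, a single misranked page moves a structure’s score a lot, which is one reason to read results like these as indicative only.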

However, there’s no one-size-fits-all approach. You need to take a cold, hard look at your business and consider things like:

- Marketing budget you have available for each locale

- Crawl bandwidth and crawl budget available for your site

- Market research: which locales should you target?

- Costs associated with localization and site maintenance

- Site performance concerns

- Overall business objectives

As SEOs, we’re responsible for realistically forecasting how much our websites will be able to grow and improve in terms of domain authority. If you believe your website can gain fantastic link authority and your team can manage the work involved in handling multiple websites, then you can consider ccTLDs (though any of these site structures can be effective). But if your team would struggle under the added burden of developing and maintaining multiple (localized!) content efforts to drive traffic to your various sites, then you need to slow down and perhaps start with subdirectories.

Myth #3: I can duplicate my website on separate ccTLDs or geo-targeted sub-folders & each will rank in their respective Googles.

This myth refers to taking a site, duplicating it exactly, and then putting it on another domain, subdomain, or subfolder for the purposes of geo-targeting.

And when I say “in their respective Googles,” I mean the country-specific versions of Google (such as google.co.uk, where searchers in the United Kingdom will typically begin a search).

You can duplicate your site, but it’s kind of pointless. Duplication does not give you an added boost; it gives you added cruft. If all that content sits on one domain, it eats into your crawl budget. Hosting duplicate copies of your site across multiple domains can be expensive and is often ineffective. And the copies will cannibalize each other’s rankings.

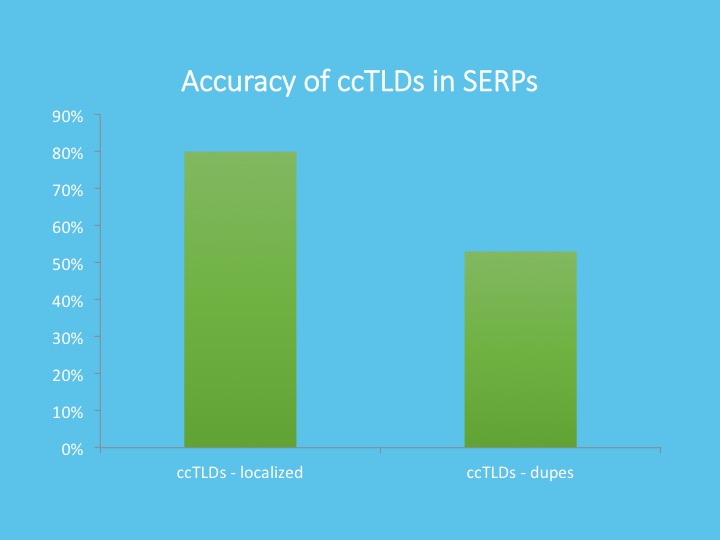

Often I’ll see a duplicate ccTLD get outranked by its .com sister in its local version of Google. For example, say example.co.uk is a mirror of example.com, and example.com outranks example.co.uk in google.co.uk. This is because the domain authority of the .com outweighs the geo-targeting signal. We saw in an earlier chart that ccTLDs can be the most accurate for showing the right content in the right version of Google, but that’s because those sites had a good spread of link authority among each of their ccTLDs, as well as localized content.

There’s a big difference between the accuracy of ccTLDs when they’re localized and when they’re dupes. I did some research using the SEMRush API, looking at 3 brands using ccTLDs in 26 country versions of Google, and found that the .com outranked the ccTLD 42 times. Don’t host mirrored copies of your site across multiple ccTLDs just for the heck of it; it’s only effective if you can localize each one.

To sum it up: Avoid simply duplicating your site if you can. The more you can do to localize and differentiate your sites, the better.

Myth #4: Geo-targeting in Search Console will be enough for search engines to understand and rank my content correctly.

Geo-targeting your content is not enough. As we covered in the last myth, if you have two pages that are exactly the same and you geo-target them in Search Console, that doesn’t necessarily mean those two pages will show up in the correct versions of Google. This doesn’t mean you should neglect geo-targeting in Google Search Console (or Bing or Yandex Webmaster Tools); you should definitely use those options. However, search engines use a number of different clues to handle international content, and geo-targeting settings do not trump those other signals.

Search engines have revealed some of the international ranking factors they use. Here are some that have been confirmed:

- Translated content of the page

- Translated URLs

- Local links from ccTLDs

- NAP (name, address, and phone number) info, which could also include local currencies and links to Google My Business profiles

- Server location*

*Note that I included server location in this list, but with a caveat — we’ll talk more about that in a bit.

You need to take into account all of these factors, and not just some of them.

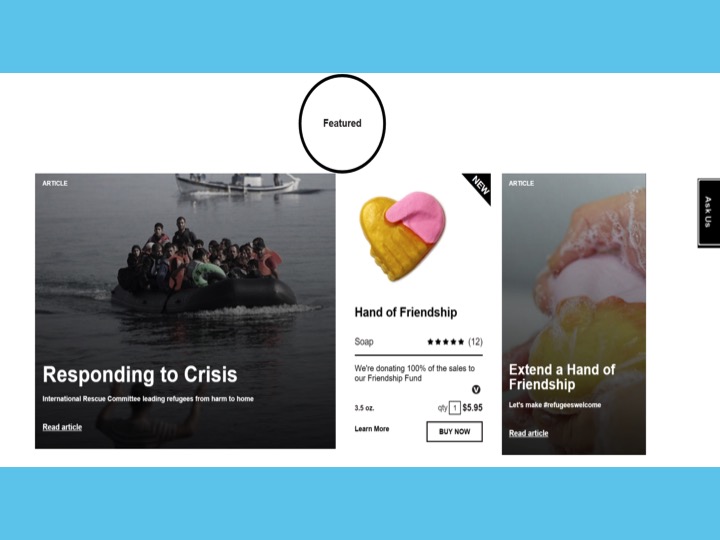

Myth #5: Why reinvent the wheel? There are multinational companies who have invested millions in R&D — just copy what they do.

The problem here is that large multinational companies don’t always prioritize SEO, and they make SEO mistakes all the time. It’s a myth that you should look to Fortune 500 websites or top e-commerce websites to see how to structure your website; they don’t always get it right. Imitation may be the sincerest form of flattery, but it shouldn’t replace careful thought.

Besides, what the multinational companies do in terms of site structure and SEO differs widely. So if you were to copy a large brand’s site structure, which should you copy? Apple, Amazon, TripAdvisor, Ikea…?

Myth #6: Using URL parameters to indicate language is OK.

Google recommends against this, and from my experience, it’s definitely best to avoid URL parameters to indicate language or region.

What this looks like in the wild is:

http://ift.tt/2a6Qud7

or

http://ift.tt/2a5eHOi

…where the target language or region of the page changes depending on the parameter. The problem is that parameters aren’t dependable. Sometimes they’ll be indexed, sometimes not. Search engines prefer unique URLs.
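If you are moving away from language parameters, one common route is to give each language its own subdirectory and 301-redirect the old parameter URLs to the new ones. The sketch below builds that old-to-new URL mapping using only the standard library; it assumes the parameter is called lang and that its value can be used directly as the folder name, both hypothetical details that will differ from site to site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def parameter_to_subfolder(url: str, param: str = "lang") -> str:
    """Map e.g. https://example.com/shoes?lang=de to https://example.com/de/shoes.

    Other query parameters are kept; URLs without the parameter are returned unchanged.
    """
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    locale = next((value for key, value in query if key == param), "")
    if not locale:
        return url
    remaining = [(key, value) for key, value in query if key != param]
    path = parts.path if parts.path.startswith("/") else "/" + parts.path
    new_path = "/" + locale.lower() + path
    return urlunsplit((parts.scheme, parts.netloc, new_path, urlencode(remaining), parts.fragment))


print(parameter_to_subfolder("https://example.com/shoes?lang=de&color=red"))
# https://example.com/de/shoes?color=red
```

In practice you would also check the parameter value against the list of locales you actually serve before turning it into a folder name.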

Myth #7: I can just proxy localized content into my existing URLs.

In this situation, a website uses a visitor’s IP address or the Accept-Language header to detect their location or browser language preference, then changes the content of the page based on that information. So the URL stays the same, but the content changes.

Google and Bing have clearly said they don’t like parameters and recommend keeping one language on one URL. Proxied content, content served by a cookie, and side-by-side translations all make it very problematic for search engines to index a page in one language. Search engines will appear to crawl from all over the world, so they’ll get conflicting messages about the content of a page.

Basically, you always want to have 1 URL = 1 version of a page.

Google has improved and will continue to improve its locale-aware crawling. In early 2015, Google announced that Googlebot would crawl from a number of IP addresses around the world, not just the US, and would use the Accept-Language header to see whether your website is locale-adaptive, changing the content of the page depending on the user. But in the same breath, they made it very clear that this technology is not perfect, that it does not replace the recommendation to use hreflang, and that they still recommend you NOT use locale-adaptive content.

Myth #8: Adding hreflang tags will help my multinational content rank better.

Hreflang tags are one of the most powerful tools in the international SEO toolbox. They’re foundational to a successful international SEO strategy. However, they’re not meant to be a ranking factor. Instead, they’re intended to ensure the correct localized page is shown in the correct localized version of Google.

In order to get hreflang tags right, you have to follow the documentation exactly. With hreflang, there is no margin for error. Make sure to use the correct language (in ISO 639-1 format) and country codes (in ISO 3166-1 Alpha 2 format) when selecting the values for your hreflang tags.

Hreflang requirements:

- Exact ISO codes for language, and for language-country if you target by country

- Return tags

- Self-referential tags

- Point to correct URLs

- Include all URLs in an hreflang group

- Use page tags or XML sitemaps, preferably not both

- Use HTTP headers for PDFs, etc.
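Several of these requirements (self-referential tags, return tags, and including every URL in the group) are satisfied automatically if every page in a group carries the same complete set of annotations. The sketch below generates that set once per group, both as link elements for HTML pages and as the value of an HTTP Link header for files such as PDFs. The function names and example URLs are hypothetical.

```python
from html import escape


def hreflang_link_elements(alternates: dict[str, str]) -> str:
    """Link elements for the <head> of EVERY page in the group.

    Because each page repeats the full set, each page references itself
    and every page it points to points back to it.
    """
    return "\n".join(
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    )


def hreflang_link_header(alternates: dict[str, str]) -> str:
    """Value for an HTTP `Link:` response header, for non-HTML files such as PDFs."""
    return ", ".join(
        f'<{url}>; rel="alternate"; hreflang="{code}"' for code, url in alternates.items()
    )


group = {
    "en-GB": "https://example.com/uk/",
    "en-US": "https://example.com/us/",
    "x-default": "https://example.com/",
}
print(hreflang_link_elements(group))
print("Link:", hreflang_link_header(group))
```

Generating the set from one source of truth per group, rather than hand-editing each page, is what keeps the tags consistent as pages are added or removed.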

Be sure to check your Google Search Console data regularly to make sure no return tag errors or other errors have been found. A return tag error is when Page A has an hreflang tag that points to Page B, but Page B doesn’t have an hreflang tag pointing back to Page A. That means the entire hreflang association for that group of pages won’t work, and you’ll see return tag errors for those pages in Google Search Console.
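Return tag errors can also be caught before Search Console reports them, by comparing the annotations collected in your own crawl. This minimal sketch assumes you already have, for each crawled URL, the hreflang code-to-URL pairs found on it; the input shape and function name are assumptions, and any alternate URL that wasn’t crawled is reported as missing its return tag.

```python
def missing_return_tags(annotations: dict[str, dict[str, str]]) -> list[tuple[str, str]]:
    """Find (page, alternate) pairs where page points to alternate but not back.

    `annotations` maps each crawled URL to the {hreflang code: URL} pairs found on it.
    """
    missing = []
    for page, alternates in annotations.items():
        for alternate in alternates.values():
            if alternate == page:
                continue  # self-referential tag: no return tag needed
            points_back = page in annotations.get(alternate, {}).values()
            if not points_back:
                missing.append((page, alternate))
    return missing


crawl = {
    "https://example.com/uk/": {"en-GB": "https://example.com/uk/", "en-US": "https://example.com/us/"},
    "https://example.com/us/": {"en-US": "https://example.com/us/"},  # no tag back to /uk/
}
print(missing_return_tags(crawl))
# [('https://example.com/uk/', 'https://example.com/us/')]
```

Each pair in the output is exactly the Page A to Page B situation described above, where Page B never points back to Page A.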

Either the page tagging method or the XML hreflang sitemap method works well. For some sites, an XML sitemap can be advantageous because it avoids the code bloat of page tags. Whichever implementation allows you to add hreflang tags programmatically is good. If you use one of the popular CMS platforms, there are tools on the market to assist with page tagging.
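For the XML sitemap method, each URL gets its own url entry that lists every alternate in its group (itself included) as an xhtml:link element. The sketch below builds that structure with Python’s standard xml.etree.ElementTree; the input format (a list of code-to-URL dicts, one per hreflang group) and the function name are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"


def hreflang_sitemap(groups: list[dict[str, str]]) -> str:
    """Build a sitemap where every URL lists all alternates in its hreflang group."""
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("xhtml", XHTML_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for alternates in groups:
        # dict.fromkeys de-duplicates URLs (e.g. x-default reusing another URL).
        for page_url in dict.fromkeys(alternates.values()):
            url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
            ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = page_url
            for code, href in alternates.items():
                ET.SubElement(url_el, f"{{{XHTML_NS}}}link", rel="alternate", hreflang=code, href=href)
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(hreflang_sitemap([{"en-GB": "https://example.com/uk/", "en-US": "https://example.com/us/"}]))
```

Because every url entry repeats the full group, the sitemap carries the self-referential and return annotations on its own, without any page tags.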

Here are some tools to help you with hreflang:

Myth #9: I can’t use a canonical tag on a page with hreflang tags.

When it comes to hreflang tags AND canonical tags, many eyes glaze over. This is where things get really confusing. I like to keep it super simple.

The simplest thing is to keep all your canonical tags self-referential. This is a standard SEO best practice anyway. Regardless of whether you have hreflang tags on a page, you should be implementing self-referential canonical tags.
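Put together, each localized page’s head carries a canonical tag pointing at the page itself, alongside the full hreflang set. A minimal sketch, with a hypothetical helper name and example URLs; it refuses to build tags for a page that isn’t in its own hreflang group, since that page would be missing its self-referential hreflang tag.

```python
from html import escape


def head_tags(page_url: str, alternates: dict[str, str]) -> str:
    """Self-referential canonical plus the page's full hreflang group."""
    if page_url not in alternates.values():
        raise ValueError(f"{page_url} is missing from its own hreflang group")
    lines = [f'<link rel="canonical" href="{escape(page_url)}" />']
    lines += [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    return "\n".join(lines)


group = {"en-GB": "https://example.com/uk/", "en-US": "https://example.com/us/"}
print(head_tags("https://example.com/us/", group))
```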

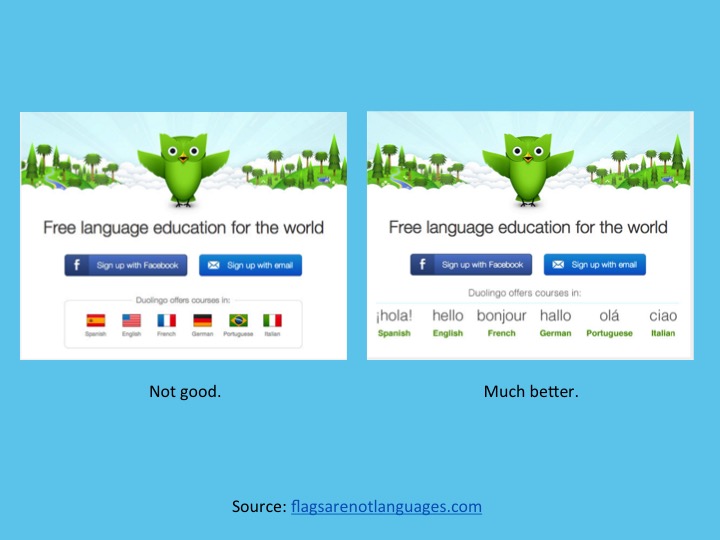

Myth #10: I can use flag icons on my site to indicate the site’s language.

Flags are not languages — there’s even a whole website dedicated to talking about this common myth: http://ift.tt/2a6QmKL. It has many examples of sites that mistakenly use flag icons to indicate languages.

For example, the UK’s Union Jack doesn’t represent all speakers of English in the world. Thanks to the course of history, there are at least 101 countries in the world where English is a common tongue. Using one country’s flag to represent speakers of a language can be very off-putting for users who speak that language but aren’t from that country.

Here’s an example where flag icons are used to indicate language. A better (and more creative) approach is to replace the flag icons with localized greetings:

If you have one or more multilingual sites, you should not use flags to represent languages. Instead, use the name of each language, written in that language: English should be “English,” Spanish should be “Español,” German should be “Deutsch,” etc. You’d be surprised how many websites forget to use localized language or country spellings.
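In markup terms, a flag-free selector can be an ordinary list of crawlable links, each labeled with the language’s own name. This sketch assumes a small hypothetical table of language names and a class name chosen for illustration; the lang attribute marks the label’s language (useful for screen readers) and the hreflang attribute on each link describes the language of the linked page.

```python
from html import escape

# Each language labeled in that language (its endonym), never with a flag.
LANGUAGE_NAMES = {
    "de": "Deutsch",
    "en": "English",
    "es": "Español",
    "fr": "Français",
    "ja": "日本語",
}


def language_selector(versions: dict[str, str]) -> str:
    """Render plain <a> links (crawlable) to each language version of the page."""
    items = [
        f'  <li><a href="{escape(url)}" hreflang="{code}" lang="{code}">'
        f"{escape(LANGUAGE_NAMES[code])}</a></li>"
        for code, url in versions.items()
    ]
    return '<ul class="language-selector">\n' + "\n".join(items) + "\n</ul>"


print(language_selector({"en": "https://example.com/en/", "es": "https://example.com/es/"}))
```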

Myth #11: I can get away with automated translations.

The technology for automated translations or machine translations has been improving in recent years, but it’s still better to avoid automated translations, especially machine translation that involves no human editing.

Automatic translations can be inaccurate and off-putting. They can hurt a website trying to rank in a competitive landscape. A great way to get an edge on your competitors is to use professional, high-quality native translators to localize your content into your target languages. High-quality localization is one of the key factors in improving your rankings when it comes to international SEO.

If you have a very large amount of content that you cannot afford to translate, choose some of the most important content for human translation, such as your main category and product pages.

Myth #12: Whichever site layout and user experience works best in our core markets should be rolled out across all our markets.

This is something I’ve seen happen on many, many sites, and it was part of the reason why eBay failed in China.

Porter Erisman tells the story in his book Alibaba’s World, which I highly recommend. He describes how, when eBay and Alibaba were duking it out in China, eBay made the decision to apply its Western UX principles to its Chinese site.

In Alibaba’s World, Erisman writes about how eBay “eliminated localized features and functions that Chinese Internet users enjoyed and forced them to use the same platform that had been popular in the US and Germany. Most likely, eBay executives figured that because the platform had thrived in more industrialized markets, its technology and functionality must be superior to a platform from a developing country.

“Chinese users preferred Alibaba’s Taobao platform over eBay, because it had an interface that Chinese users were used to – cute icons, flashing animations, and had a chat feature that connected customers with sellers. In the West, bidding starts low and ends high, but Chinese users preferred to haggle with sellers, who would start their bids high and end low.”

From this story, you can see how localization (in terms of site design, UX, and holistic business strategy) can be of paramount importance.

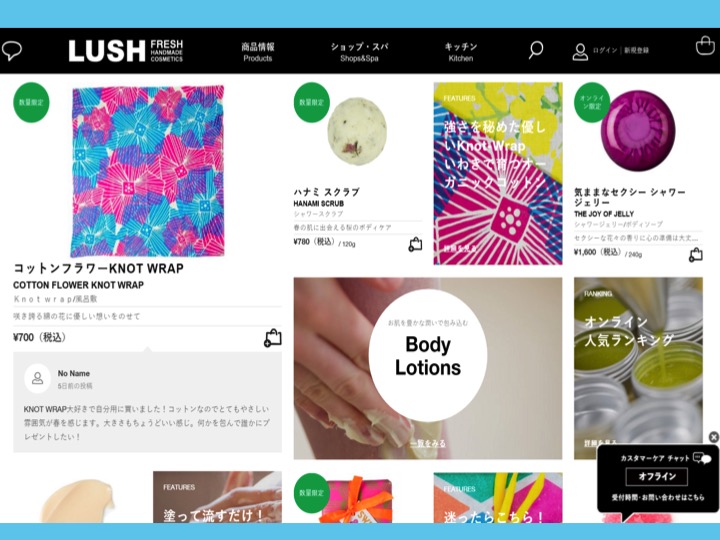

Here is an example of Lush’s Japanese site, which has bright colors and a lot going on, and is almost completely localized into Japanese. Also notice the chat box in the bottom right:

Now compare that to the Lush USA site. There’s a lot more white space here, fewer tiles, and the chat box is only a small button on the right sidebar.

They’ve made the effort to adjust their layout according to how they want to express their brand in each market, rather than just swapping localized tiles into the same CMS layout. Yet in both markets they have many similar elements, too. They’re a good example of keeping a unified global brand while leaving plenty of room for local expression.

The key to success internationally is localizing your online presence while at the same time having a unified global brand. From an SEO perspective, you should make sure there’s a logical organization to your global URLs so that localized content can be geo-targeted by subdirectory, subdomain, or domain. You should focus on getting hreflang tags right, etc. But you should also work with a content strategy team to make sure that there will be room for trans-creation of content, as well as with a UX design team to make sure that localized content can be showcased appropriately.

Design, UX, and site architecture all play increasingly important roles in SEO. By localizing your design, you’re reducing duplicate content and potentially improving your site engagement metrics (and, as a corollary, your clickstream data).

Things that an SEO definitely wants to localize are:

- URLs

- Meta titles & descriptions

- Navigation labels

- Headings

- Image file names, internal anchor text, & alt text

- Body content

Make sure to focus on keyword variations between countries, even within the same language. For example, there are differences in names and spellings for many things in the UK versus the US. A travel agency might describe their tours to a British audience as “tailor-made, bespoke holidays,” while they would tell their American audience they sell “customized vacation packages.”

If you used the same keywords to target all countries that share a common tongue, you’d be losing out on the ability to choose the best keywords for each country. Take this into account when considering your keyword optimization.

Myth #13: We can just use IP sniffing and auto-redirect users to the right place. We don’t need hreflang tags or any type of geo-targeting.

A lot of sites use some form of automatic redirection, detecting the user’s IP address and redirecting them to another website or to a different page on their site that’s localized for their region. Another common practice is to use the Accept-Language header to detect the user’s browser language preference, redirecting users to localized content that way.

However, Google recommends against automatic redirection. It can be inaccurate, can prevent search engines from crawling and indexing your whole site, and can be frustrating for users when they’re redirected to a page they don’t want. In fact, hreflang annotations, when correctly added to all your localized content and correctly cross-referenced, should eliminate or greatly reduce the need for any auto-redirection. You should avoid automatic redirection as much as possible.

Here are all the reasons (that I can think of) why you shouldn’t do automatic redirection:

- User agents like Googlebot may have a hard time reading all versions of your page if you keep redirecting them.

- IP detection can be inaccurate.

- A single country can have multiple official languages.

- A single language can be official in multiple countries.

- Server load time can be negatively affected by having to add in all these redirects.

- Shared computers between spouses, children, etc., could have different language preferences.

- Expats and travelers may try to access a website that assumes they’re locals, making it frustrating for the users to switch languages.

- Internet cafes, hotel computer centers, and school computer labs may have diverse users.

- The user prefers to browse in one language, but make transactions in another. For example, many people who speak English as a second language will search in English if they think they’ll get better results that way. But when it comes to the checkout process, especially when reading legalese, they prefer to switch to their native language.

- A person sends a link to a friend who lives somewhere else, and the friend can’t see what the sender sees.

Instead, a much better user experience is to provide a small, unobtrusive banner that appears when you detect a user may find another portion of your site more relevant. TripAdvisor and Amazon do a great job of this. Here’s an image from Google Webmaster Blog that exemplifies how to do this well:

One exception to the never-use-auto-redirection rule is that, when a user selects a country and/or language preference on your site, you should store that preference in a cookie and redirect the user to their preferred locale whenever they visit your site in the future. Make sure that they can set a new preference any time, which will re-set the cookie preference.
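The policy in the last two paragraphs (suggest rather than redirect, unless the visitor has explicitly chosen a locale) can be expressed as a small decision function. This is a sketch under assumptions: the locale table, the subdirectory layout, and the idea that the saved choice arrives as a cookie value are all hypothetical, and the Accept-Language parsing handles only the common tag;q=value form. A request that carries no saved preference is never redirected; at most it gets a banner suggestion.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical locales this site serves, mapped to their subdirectories.
LOCALES = {"en-us": "/us/", "en-gb": "/uk/", "de-de": "/de/"}


@dataclass
class Decision:
    redirect_to: Optional[str] = None  # only for a preference the user chose (cookie)
    suggest: Optional[str] = None      # locale to offer in an unobtrusive banner


def parse_accept_language(header: str) -> list[str]:
    """Language tags from an Accept-Language header, highest q-value first."""
    weighted = []
    for position, item in enumerate(header.split(",")):
        tag, *params = [piece.strip() for piece in item.split(";")]
        quality = 1.0
        for param in params:
            name, _, value = param.partition("=")
            if name.strip() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        if tag and quality > 0:
            weighted.append((-quality, position, tag.lower()))
    return [tag for _, _, tag in sorted(weighted)]


def decide(path: str, saved_locale: Optional[str], accept_language: str) -> Decision:
    current = next((loc for loc, prefix in LOCALES.items() if path.startswith(prefix)), None)
    # 1. The user explicitly picked a locale earlier: honor it with a redirect.
    if saved_locale in LOCALES:
        if saved_locale != current:
            return Decision(redirect_to=LOCALES[saved_locale])
        return Decision()
    # 2. Otherwise never redirect; at most suggest the best match in a banner.
    for tag in parse_accept_language(accept_language):
        match = tag if tag in LOCALES else next(
            (loc for loc in LOCALES if loc.startswith(tag + "-")), None)
        if match:
            return Decision(suggest=match) if match != current else Decision()
    return Decision()


print(decide("/us/shoes", None, "de-DE,de;q=0.9,en;q=0.8"))
```

Keeping the redirect branch tied to an explicit saved choice is what separates this from the IP or header sniffing the myth warns against.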

On that note, also always make sure to have a country and/or language selector on your website that’s located on every page and is easy for users to see and for search engine bots to crawl.

Myth #14: I need local servers to host my global content.

Many website owners believe they need local servers in order to rank well abroad. This is because Google and Bing clearly stated that local servers were an important international ranking factor in the past.

However, Google confirmed last year that local server signals are not as important as they once were. With the rise in popularity of CDNs, local servers are generally not necessary. You definitely need a local server for hosting sites in China, and it may be useful in some other markets like Japan. It’s always good to experiment. But as a general rule, what you need is a good CDN that will serve up content to your target markets quickly.

Myth #15: I can’t have multi-country targeted content that’s all in the same language, because then I’d incur a duplicate content penalty.

This myth is born from an underlying fear of duplicate content. Something like 30% of the web contains some dupe content (according to a recent RavenTools study). Duplicate content is a fact of life on the web. You have to do something spammy with that duplicate content, such as create doorway pages or scrape content, in order to incur a penalty.

Geo-targeted, localized content is not spammy or manipulative. There are valid business reasons for wanting to have very similar content geared for different users around the world. Matt Cutts confirmed that you will not incur a penalty for having similar content across multiple ccTLDs.

The reality is, you CAN have multi-country targeted content in the same language. It’s just that you need to combine hreflang tags + localization in order to get it right. Here are some ways to avoid duplicate content problems:

- Use hreflang tags

- Localized keyword optimization

- Adding in local info such as telephone numbers, currencies, addresses in schema markup, and Google My Business profiles

- Localized HTML sitemaps

- Localized navigation and home page features that cater to specific audiences.

- Localized images that resonate with the audience. American football, for example, is not very popular outside the US. Also, be mindful of holidays around the world and of current events.

- Transcreated content (where you take an idea and tailor it for a specific locale), rather than translation (which is more word-for-word than concept-for-concept)

- Obtain links from local ccTLDs pointing to your localized content
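For the local-info item, schema.org’s LocalBusiness type has properties for a telephone number, a PostalAddress, and currenciesAccepted. The sketch below emits those as a JSON-LD script tag for one locale; the business details are placeholders and the helper name is hypothetical, so check the schema.org definitions for the properties your own pages need.

```python
import json


def local_business_jsonld(business: dict) -> str:
    """Wrap one locale's business details in a schema.org LocalBusiness JSON-LD block."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": business["name"],
        "url": business["url"],
        "telephone": business["telephone"],
        "currenciesAccepted": business["currency"],
        "address": {
            "@type": "PostalAddress",
            "streetAddress": business["street"],
            "addressLocality": business["city"],
            "postalCode": business["postal_code"],
            "addressCountry": business["country"],
        },
    }
    body = json.dumps(data, ensure_ascii=False, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder details for a hypothetical UK storefront.
uk_store = {
    "name": "Example Ltd",
    "url": "https://example.com/uk/",
    "telephone": "+44 20 0000 0000",
    "currency": "GBP",
    "street": "1 Example Street",
    "city": "London",
    "postal_code": "AB1 2CD",
    "country": "GB",
}
print(local_business_jsonld(uk_store))
```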

As you can see, there are many common myths surrounding international SEO, but hopefully you’ve gained some clarity and feel better equipped to build a great global site strategy. I believe international SEO will continue to be of growing interest, as globalization is a continuing trend. Cross-border e-commerce is booming — Facebook and Google are looking at emerging markets in Africa, India, and Southeast Asia, where more and more people are going online and getting comfortable buying online.

International SEO is ripe for optimization — so you, as SEO experts, are in a very good position if you understand how to set your website up for international SEO success.
